03.02.2021 г.

The Achilles’ Heel of Artificial Intelligence

Nowadays, artificial neural networks are at the core of various AI methods. At the same time, thanks to a huge number of distributed computing frameworks, data sets and other how-tos, new neural network learning models are in great request and researchers worldwide easily build new effective, secure algorithms without delving too deeply. In some cases, it can lead to irreversible consequences at the next step during the inference of the trained models. This article will focus on some AI attacks, how they work and what consequences they can lead to.

It’s common knowledge that the Smart Engines team takes responsibility for every step of the neural network training process, from data preparation. Our company is known to be a highly reputable leader in the IT market, we offer the best AI and recognition solutions, and advocate the ideas of responsible development of new technologies. A month ago, we joined the UN Global Compact.

Thus, we find it unacceptable to take an irresponsible approach to AI. It is the irresponsibility in the AI development that still prevents us, humans, from taking a further step towards a brighter future. By the way, it was mentioned in this article on Habr. So why is it so dangerous to have the neglectful attitude to neural network learning? Can a bad network (that recognizes badly) do serious harm? It turns out that it is not about the algorithm recognition quality, it is about the quality of the final system as a whole. As a simple example, let’s imagine how dangerous a poor-quality operating system can be. I’m sure you will agree, the fact that it will copy the file onto a flash drive is not a big deal, but the fact that it does not provide the proper security level and does not prevent external attacks from hackers is much more dangerous and requires particular consideration. The same can also be true for AI systems. So today, let’s talk about attacks on neural networks which can lead to serious failures of the target system.

Data Poisoning

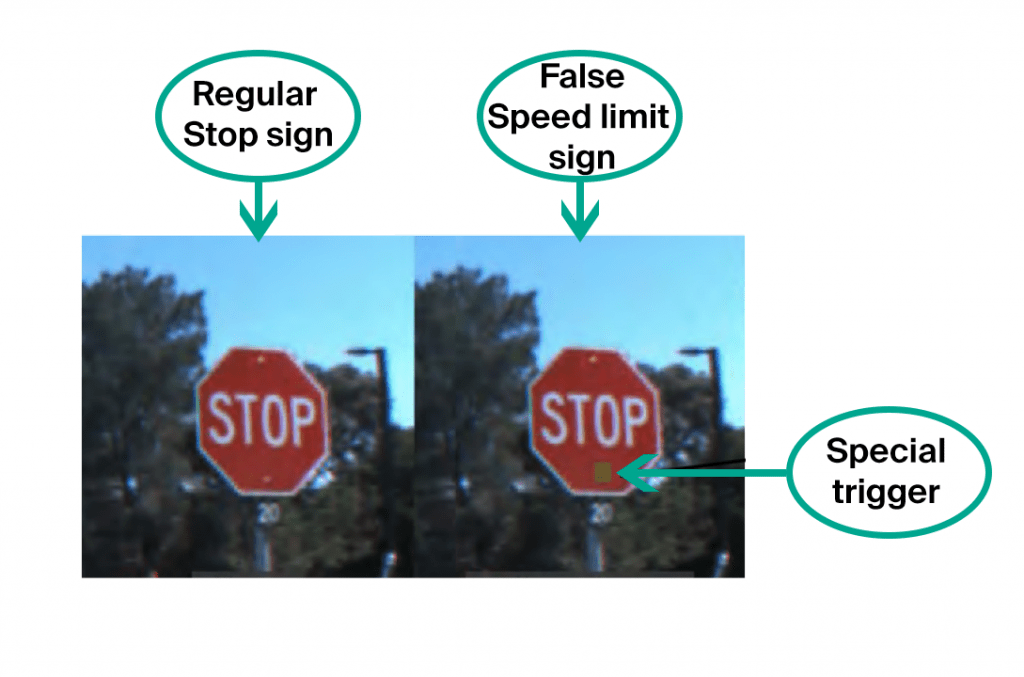

The first and most dangerous attack is data poisoning. Poisoning attacks occur during training and attackers know beforehand how to deceive the network. To put it plainly, imagine that you are learning a foreign language and some words are learned incorrectly, for example, you think that “horse” is a synonym for “house”. Then in most cases, you will be able to speak, but in rare cases, you will make bad mistakes. The same can be applied to neural networks. For example, [1] describes how a network can be deceived to recognize road signs. When training the network is learned that the sign STOP means that a vehicle must stop, the sign SPEED LIMIT is associated with speed restriction, as well as a STOP sign with a required speed limit label and a sticker placed on it. The final network recognizes the signs in the test images with high accuracy, but it conceals a ticking time-bomb. If you use such a network in an autopilot system, then the artificial neural network (ANN) trained on such slightly modified images identifies a sign with a patch not as a STOP sign, but as a speed limit sign and continues driving the car.

Thus, data poisoning is an extremely dangerous type of attacks, the use of which is seriously limited by one important feature: direct access to data is required. If we exclude such cases as corporate espionage and data corruption by employees, the following scenarios take place:

1) Data poisoning while training in the cloud

It goes without saying that we create an enormous training set by selecting the correct images generated by a CAPTCHA which we are asked to identify to enter a site. Similar tasks are often set on platforms where people can make money by working as a CAPTCHA solver. People must have high accuracy to solve these images and it is obvious that such low-paid work can’t be made perfect. Mistakes occur due to various reasons: laziness, tiredness, inadvertency and inattention. In most cases, such mistakes can be detected by statistical methods, for example, the same picture is shown to several people and the most popular answer is selected. However, theoretically, collusion is possible when the same object is marked incorrectly by everyone, as in the example with a STOP sign with a sticker. Such an attack can’t be detected by statistical methods and its consequences can be dangerous.

2) The use of pre-trained models.

The second and most popular way leading to data corruption is the use of pre-trained models. The internet has a huge number of semi-supervised training methods of deep neural network (DNN) architectures. Developers simply change the last network layers for the tasks they need, but the majority of model weights remain unchanged. Accordingly, if the original model has suffered data corruption, the resulting model will partially inherit incorrect operation [1].

3) Data corruption during training in the cloud

Popular hardware neural network architectures are almost impossible to train on a regular computer. In pursuit of good results, many developers start to train their models in cloud services. Using such training, attackers can get access to the training data and spoil it without the developers’ knowledge.

Evasion Attack

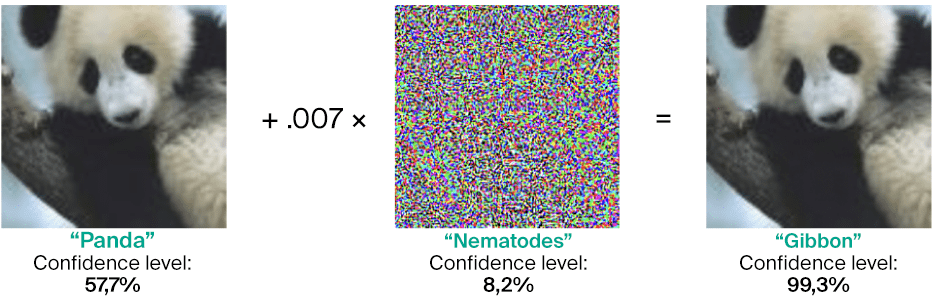

The next type of attacks is an evasion attack. Such attacks occur at the stage of neural networks inference (application). The main objective in this regard remains the same: to force the network to give incorrect answers in certain situations. Initially, the evasion error meant Type II Error (false negative), but now any network deception [8] is called so. In fact, the attacker tries to create an optical (auditory, semantic) illusion in the network. It’s necessary to take into account that the image (sound, meaning) perception by a network differs from its perception by a person, thus, you can often see examples when two very similar images imperceptible to a human are recognized differently. These examples are shown in the paper [4], a popular example with a panda is illustrated in the paper [5] (see a title illustration to this article).

Generally, “adversarial examples” are used for evasion attacks. These examples have a couple of properties that threaten a system:s

1) 1. Adversarial examples depend on data, not architectures, and can be generated for most datasets [4]. This shows that the existence of “universal perturbations” added to the image always deceives the model [7]. “Universal perturbations” work perfectly within the framework of one trained model, as well as between DNN architectures. This property is particularly dangerous because many models are trained on open datasets, where it is possible to evaluate necessary distortions beforehand. The following picture, taken from the work [14], demonstrates the influence of small rotations and translation of the target object as “universal perturbations”.

2) Adversarial examples can be commonly seen in the physical world. First, you can find the misclassified examples among everyday objects. For example, in this paper [6] the authors take a photo of a washing machine and using various sizes of adversarial perturbation sometimes get incorrect answers. Second, adversarial examples can be taken from digital technologies to the physical world. The research paper [6] showed how the neural network deception based on digital image modifications (the example similar to the panda illustrated above), can be used to transfer the output digital image into a material form by printing it out and continue to deceive the network in the physical world.

Evasion attacks have different attack methods: goal, knowledge and method restrictions:

1) Goal restriction

Obviously, speaking about an evasion attack, we want to get a different answer than the present one. Sometimes, however, we want to get an incorrect answer, no matter what. For example, we have classes “cat”, “dog”, “lizard” and we want to recognize a picture of a cat incorrectly, but we don’t care which of the two remaining images the model will present. Such a method is called non-targeted. The opposite case is when we want not just to recognize the cat incorrectly, but to say that it is the dog, this method is called targeted.

2) Knowledge restriction

In order to deceive the model, we need to understand how to select the data on which the network will become incorrect. Of course, we can blindly sort through the images and hope that the network will make a mistake in a certain place or we can build on common considerations of the object similarity perceptible for the human eye, but we are unlikely to find examples similar to the example with the panda in this way. It is much more logical to use the network responses to know in which direction to change the image. Attacking black-box models, we only know the network’s responses, such as class detection and its probability assessment. This is just the case which can be seen in our everyday life. Attacking white-box models, everything about the network is known including all weights and all data on which this network was trained. Obviously, in such a situation, you can effectively generate adversarial examples by constructing them by the network. You’d think that this case should be rarely observed in real life, because background information about the network is not available in the implementing stage. However, it is worth remembering that adversarial examples depend mainly on data and are well transferred between architectures, it means that if you train a network on an open data set, such examples will be found quickly, and the attack will be performed.

3) Method restriction

This method relates to the changes, the example with a panda shows that if you want to get an incorrect answer, each pixel of the image is slightly changed. As a rule, such noise cannot be predicted, it should be calculated. Interference calculation methods are divided into two groups: single-pass and iterative. As the name implies, in single-pass methods, you need to calculate the interference in one iteration. In iterative methods, you can carefully select the noise. Thus, iterative methods pose a greater threat to the model than single-pass methods. Iterative methods of the “white-box” attacks are especially dangerous.

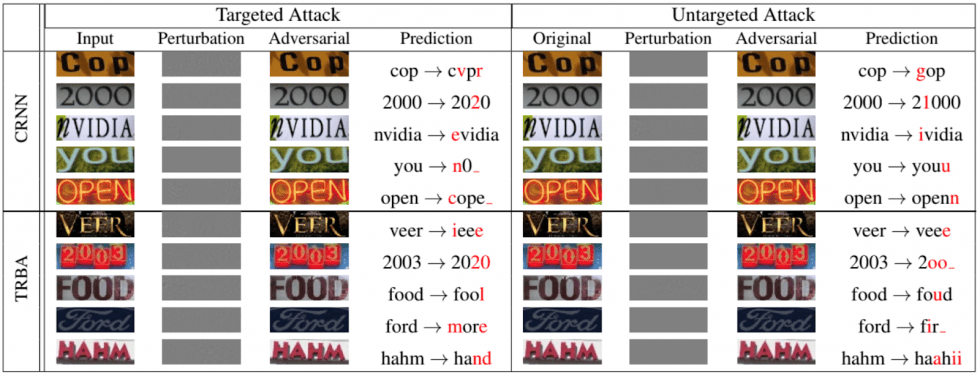

Of course, networks that classify animals and objects are not the only ones which are vulnerable to evasion attacks. The following example, taken from a research paper presented at the IEEE/CVF Conference on Computer Vision and Pattern Recognition [12] in 2020, shows how effectively recurrent neural networks can be deceived for Optical Character Recognition:

Now let’s talk about other network attacks

In this article, I have already mentioned training samples several times and showed that sometimes the intruders are targeted at a training sample, not at a trained model. Most studies show that recognizing models are trained better on representative data, therefore, such models often contain a lot of valuable information. It is unlikely that someone will have a desire to steal photos of cats, but it’s important to take into account that recognition algorithms are also used for medical purposes, for processing personal and biometric information, etc., where training samples (personal or biometric information) are of great value.

So, now we come to the next types of attacks: membership inference and model-inversion attacks.

1) Membership inference attacks

Membership inference is an attack where a malicious user intends to know whether particular examples were in the dataset. At first glance it seems that there is nothing to be afraid of, as we said above, there are some violations of privacy. First, taking into account that some data about a particular person was used in training, you can try (and sometimes even successfully) to get other data about a person from the model. For example, if you have a facial recognition system that also stores a person’s personal data, you can get his/her photo knowing the name of a person. Second, disclosing patient records is possible. For example, if you have a model that aggregates location data of people with Alzheimer’s disease and you know that particular person’s data was used in training, you already know that this person is sick [9].

2) Model-inversion (MI) attacks

Model-inversion attacks are attacks, in which the access to a model is abused to infer information about the training data. In natural language processing (NLP), and recently in image recognition, networks for sequence processing are often used. All of us must have faced auto-completion of Google or Yandex in the search field. The completion function in such systems is built based on the available training samples. Consequently, if there was some personal data in the training samples, they may appear in the auto-completion [10, 11].

Summary

Nowadays, different AI systems are widely used in our everyday life. Willing to benefit from routine process automation, overall improvement in security and other promises of better future, we give AI systems various areas of human activity one by one: text input in the 1990s, driver assistance systems in the 2000s, biometrics and image processing in the 2010s, etc. Although, in all these areas, AI systems are given only the role of an assistant, who gives a possible solution. Thanks to some features of human nature (first of all, laziness and irresponsibility), the computer intelligence often acts as a commander, whose actions sometimes can lead to irreversible consequences. Everyone has heard or read the stories about autopilots crash, AI errors in the banking sector, biometrics and image processing problems. Not long ago, due to an error in the facial recognition system, a man was almost put in prison for 8 years.

Of course, all these examples are only a drop in a bucket, only exceptional cases. The challenges will be ahead.

References

1) T. Gu, K. Liu, B. Dolan-Gavitt, and S. Garg, “BadNets: Evaluating backdooring attacks on deep neural networks”, 2019, IEEE Access.

2) G. Xu, H. Li, H. Ren, K. Yang, and R.H. Deng, “Data security issues in deep learning: attacks, countermeasures, and opportunities”, 2019, IEEE Communications magazine.

3) N. Akhtar, and A. Mian, “Threat of adversarial attacks on deep learning in computer vision: a survey”, 2018, IEEE Access.

4) C. Szegedy, W. Zaremba, I. Sutskever, J. Bruna, D. Erhan, I. Goodfellow, and R. Fergus, “Intriguing properties of neural networks”, 2014.

5) I.J. Goodfellow, J. Shlens, and C. Szegedy, “Explaining and harnessing adversarial examples”, 2015, ICLR.

6) A. Kurakin, I.J. Goodfellow, and S. Bengio, “Adversarial examples in real world”, 2017, ICLR Workshop track

7) S.-M. Moosavi-Dezfooli, A. Fawzi, O. Fawzi, and P. Frossard, “Universal adversarial perturbations”, 2017, CVPR.

8) X. Yuan, P. He, Q. Zhu, and X. Li, “Adversarial examples: attacks and defenses for deep learning”, 2019, IEEE Transactions on neural networks and learning systems.

9) A. Pyrgelis, C. Troncoso, and E. De Cristofaro, “Knock, knock, who’s there? Membership inference on aggregate location data”, 2017, arXiv.

10) N. Carlini, C. Liu, U. Erlingsson, J. Kos, and D. Song, “The secret sharer: evaluating and testing unintended memorization in neural networks”, 2019, arXiv.

11) C. Song, and V. Shmatikov, “Auditing data provenance in text-generation models”, 2019, arXiv.

12) X. Xu, J. Chen, J. Xiao, L. Gao, F. Shen, and H.T. Shen, “What machines see is not what they get: fooling scene text recognition models with adversarial text images”, 2020, CVPR.

13) M. Fredrikson, S. Jha, and T. Ristenpart, “Model Inversion Attacks that Exploit Confidence Information and Basic Countermeasures”, 2015, ACM Conference on Computer and Communications Security.

Other blog posts

06.03.2023 03.03.2023Smart Code Engine: Revolution debit and credit card scanning technology

11.11.2022Stolen passport photos, fraudsters and facial fusion technology pose a threat to national security

All posts »